Putting the A-game into AR headsets

Ramya Sriram

6 min read

14th Sep, 2019

One of the biggest barriers to the adoption of Augmented Reality is how processor-hungry, and therefore power-hungry, it is. Today, Focal Point's D-Tail human motion modelling technology can dramatically increase the accuracy and decrease the battery load of existing AR systems.

AR headsets are, of course, vision-based technologies: they aim to overlay digital representations of information or objects on your view of the world. To do this successfully, the headset needs to know a few key pieces of information:

- its offset from the area of interest in 3 dimensions;

- its orientation relative to that area of interest;

- and the rates of change of each of those properties (i.e. linear and angular velocities).
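To make these three requirements concrete, here is a minimal sketch of the state a headset might track, assuming a simple constant-velocity model over short intervals. The `PoseState` class, its field names and the yaw-only orientation are hypothetical simplifications for illustration; they are not part of any Focal Point or ARCore API.

```python
import math
from dataclasses import dataclass


@dataclass
class PoseState:
    """Hypothetical headset state relative to an area of interest (illustrative only)."""
    x: float                # offset east of the area of interest, metres
    y: float                # offset north, metres
    z: float                # offset up, metres
    yaw: float              # heading relative to the area of interest, radians (yaw only, a simplification)
    vx: float = 0.0         # linear velocity components, m/s
    vy: float = 0.0
    vz: float = 0.0
    yaw_rate: float = 0.0   # angular velocity about the vertical axis, rad/s

    def predict(self, dt: float) -> "PoseState":
        """Propagate the state forward by dt seconds, assuming constant velocities."""
        new_yaw = (self.yaw + self.yaw_rate * dt) % (2 * math.pi)  # wrap to [0, 2*pi)
        return PoseState(
            self.x + self.vx * dt,
            self.y + self.vy * dt,
            self.z + self.vz * dt,
            new_yaw,
            self.vx, self.vy, self.vz, self.yaw_rate,
        )


# A wearer 10 m west of the area of interest, walking east and turning slowly
state = PoseState(x=-10.0, y=0.0, z=0.0, yaw=0.0, vx=1.4, yaw_rate=0.1)
later = state.predict(2.0)
print(round(later.x, 2), round(later.yaw, 2))  # -7.2 0.2
```

Offset and orientation say where the headset is; the velocities let it extrapolate between sensor updates.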

There is no doubt that the best way to solve this particular problem of relative offset, relative pose, relative velocity and relative angular velocity is with the visual processing itself, as it is then possible to close the loop between the processing and projection stages and provide stable and accurate imagery. But everything I have just described adds considerable processing overhead, and therefore carries a heavy cost in size, weight, power and battery consumption. This is why AR headsets today are not delicate glasses but entire helmets.

The future “vision” for AR is still very far from reality

An over-reliance on vision to provide all of an AR system's capabilities, including its understanding of its own location and orientation in the world, is its blind spot, and it will limit the market size until this problem is solved. Fundamentally, people want to be like Iron Man without having to look like Iron Man.

The trick to reducing the size, weight, power and battery consumption of these platforms is to use a significantly lighter-weight method than vision for understanding motion through space. An AR system only needs to enable visual processing at the final stage of the Augmented Reality process: the part where it overlays information onto the real world. Running vision continuously just to confirm that you are not yet in a location and orientation where AR overlays should be shown is a terrible idea, especially if a more lightweight method is available. For example, picture a world where your normal glasses are actually smart glasses that overlay prices and discounts on particular objects in particular tech-savvy stores (Apple, Tesla, etc.). Your smart glasses are activated when they are near and facing regions of AR data, so that they can enable visual processing and search the scene for QR codes or other fiducials that provide the tag for overlaying the AR data as required. But for the 99% of your time spent away from such data-objects, you shouldn't be continuously running a visual navigation system. There is a much skinnier alternative: D-Tail.
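The "near and facing" activation rule above can be sketched as a simple geometric check. This is a hypothetical illustration, not Focal Point's implementation: it assumes a 2-D floor plan in metres, a known location for each AR region, a heading estimate supplied by a lightweight (non-visual) tracker, and arbitrary thresholds (`radius_m`, `fov_rad`) chosen only for the example.

```python
import math


def should_enable_vision(user_xy, heading_rad, region_xy,
                         radius_m=10.0, fov_rad=math.radians(60)):
    """Return True when the wearer is within radius_m of an AR region and facing it.

    Hypothetical gate: positions are 2-D in metres, heading is measured
    anticlockwise from the +x axis, and fov_rad is the full field of view.
    """
    dx = region_xy[0] - user_xy[0]
    dy = region_xy[1] - user_xy[1]
    distance = math.hypot(dx, dy)
    if distance > radius_m:
        return False  # too far away: keep the camera pipeline powered down
    if distance == 0.0:
        return True   # standing on the region itself: facing is irrelevant
    bearing = math.atan2(dy, dx)
    # Smallest signed angle between heading and bearing, wrapped to [-pi, pi)
    offset = (bearing - heading_rad + math.pi) % (2 * math.pi) - math.pi
    return abs(offset) <= fov_rad / 2


# A shelf of tagged products 5 m ahead (+x) of a wearer facing +x
print(should_enable_vision((0.0, 0.0), 0.0, (5.0, 0.0)))      # True
print(should_enable_vision((0.0, 0.0), math.pi, (5.0, 0.0)))  # False: facing away
print(should_enable_vision((0.0, 0.0), 0.0, (50.0, 0.0)))     # False: too far
```

Only when this cheap check passes would the expensive camera pipeline be woken to search for fiducials.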

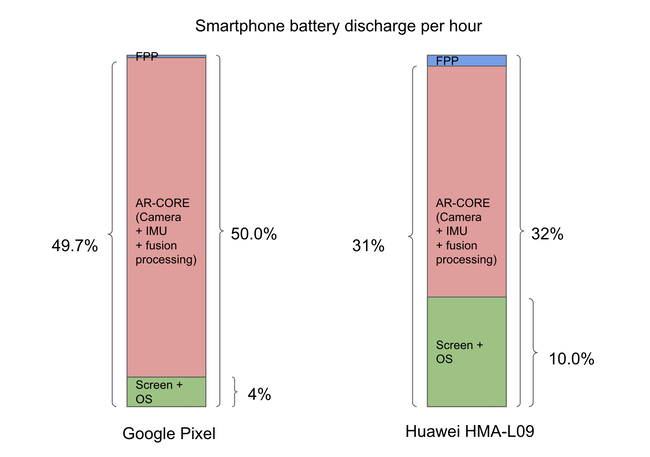

Processing the accelerometer, gyroscope and other sensor data within a world-leading human motion modelling system allows Focal Point to provide an understanding of motion through space at much lower battery consumption than vision-based AR systems. Our trials against ARCore have demonstrated that D-Tail has between 30x and 50x lower battery consumption than this flagship vision-based location technology. The exact difference depends on which smartphone the test is carried out on, because ARCore's battery consumption varies across devices.
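To see what the 30x to 50x figure could mean in combination with the 99% idle-time example above, here is some back-of-the-envelope arithmetic. It is an illustrative sketch under loud assumptions, not a measured result: it assumes inertial tracking runs all the time at 1/30 to 1/50 of the vision pipeline's tracking power, that vision is switched on for only 1% of the time, and that camera warm-up, display and other overheads can be ignored. The function name is hypothetical.

```python
def relative_consumption(vision_duty, inertial_ratio):
    """Average tracking power relative to always-on vision tracking.

    Assumes (hypothetically) that inertial tracking runs continuously at
    1/inertial_ratio of the vision pipeline's power, that vision runs only
    for the fraction vision_duty of the time, and that nothing else differs.
    """
    return vision_duty + 1.0 / inertial_ratio


for ratio in (30, 50):
    r = relative_consumption(0.01, ratio)
    print(f"{ratio}x: {r:.3f} of always-on vision, about {1 / r:.0f}x saving")
# 30x: 0.043 of always-on vision, about 23x saving
# 50x: 0.030 of always-on vision, about 33x saving
```

Under these assumptions the always-on inertial layer, not the brief bursts of vision, dominates the remaining budget, which is why a cheap motion model matters so much.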

This movie demonstrates the performance of D-Tail’s camera-free tracking system. The demo app shows a green arrow representing the motion of the user through space, and a yellow speed bar.

- The first scene in the movie shows that simply rotating the phone in the hand and covering the camera does not disrupt D-Tail's ability to correctly reflect the actual motion through space. There is a one-second lag in the processing, which is a feature of the human motion modelling system. But this is not an issue for a system designed simply to confirm whether the AR device is within range of an AR location and in the right pose: within one second of the user being near that AR location and looking towards it, the visual system would be powered up, and the AR information would then be delivered using visual processing, with the lag limited by the visual technology instead.

- The second scene of the movie demonstrates the system's ability to correctly account for side and back steps. These are a difficult challenge for inertial-only tracking systems, but D-Tail easily handles such complicated human motions.

- In the third scene, D-Tail is compared against ARCore. When the camera is able to see the scene, ARCore's tracking performance is similar to D-Tail's. However, when the camera is temporarily obscured, ARCore breaks and cannot continue to track location correctly. When the view of the scene returns, the location and pose provided by ARCore are both incorrect for the remainder of the walk, whereas the D-Tail data remains correct.
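One simple way to absorb the one-second processing lag described in the first scene is to wake the camera slightly earlier, widening the activation distance by how far the wearer can travel during the lag. This is a hypothetical sketch: the 1.4 m/s walking speed and the 10 m base radius are our illustrative assumptions, not D-Tail figures, and the function name is invented for the example.

```python
def activation_radius(base_radius_m, speed_m_s, lag_s=1.0):
    """Widen a hypothetical activation radius to absorb tracking lag.

    While the motion estimate is lag_s seconds behind, the wearer may have
    moved up to speed_m_s * lag_s metres closer than the estimate reports,
    so the camera is woken that much earlier.
    """
    return base_radius_m + speed_m_s * lag_s


# Assumed brisk walking speed of 1.4 m/s with the one-second lag
print(round(activation_radius(10.0, 1.4), 2))  # 11.4
```

A margin of about a metre and a half is small compared with the distances over which a store display would typically be approached, so the lag costs very little extra camera time under these assumptions.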

ARCore and similar visual tracking systems are seriously affected by camera obstructions, poor lighting, looking at the sky, looking directly into bright lights, or operating in an environment with many moving objects and few static ones. Integration with a robust inertial human motion modelling system like D-Tail is critical to providing robust, low-battery-consumption AR technologies.
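What such an integration might look like at its simplest: keep an inertial position estimate running at all times, and accept a camera-based fix only when it agrees with the inertially predicted motion. This is a hypothetical consistency gate, not ARCore's API or D-Tail's method; a production system would more likely use a probabilistic filter (for example a Kalman filter). The function name, the 2-D positions and the `max_disagreement_m` threshold are all assumptions for illustration.

```python
import math
from typing import List, Optional, Sequence, Tuple

Vec2 = Tuple[float, float]


def fused_track(start: Vec2,
                vision_fixes: Sequence[Optional[Vec2]],
                inertial_steps: Sequence[Vec2],
                max_disagreement_m: float = 2.0) -> List[Vec2]:
    """Hypothetical fallback: use a vision fix only when it agrees with inertial motion.

    vision_fixes[i] is the camera-based position at sample i, or None when the
    camera is obscured; inertial_steps[i] is the inertial displacement from
    sample i-1 to sample i.
    """
    position = start
    track = []
    for fix, (dx, dy) in zip(vision_fixes, inertial_steps):
        predicted = (position[0] + dx, position[1] + dy)  # inertial dead reckoning
        if fix is not None and math.dist(fix, predicted) <= max_disagreement_m:
            position = fix        # vision agrees with inertial motion: take the camera fix
        else:
            position = predicted  # camera lost or implausible: keep the inertial estimate
        track.append(position)
    return track


steps = [(1.0, 0.0)] * 4
fixes = [(1.0, 0.0), None, None, (9.0, 5.0)]  # camera obscured, then a bad re-fix
print(fused_track((0.0, 0.0), fixes, steps))
# [(1.0, 0.0), (2.0, 0.0), (3.0, 0.0), (4.0, 0.0)]
```

Note that this simple gate never re-anchors if the two sources drift apart permanently; it only illustrates how an inertial layer can reject the kind of incorrect post-occlusion pose shown in the third scene.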