A complete guide to ADAS sensors

Ramya Sriram

16 min read

19th Nov, 2024

Automotive

ADAS (Advanced Driver Assistance Systems) refers to a suite of electronic systems in vehicles designed to aid with driving tasks, enhance safety and improve overall driving efficiency. ADAS uses various sensors, cameras, radar, and software to detect surroundings, assess potential hazards, and help the driver make decisions or take actions to avoid accidents or maintain safe driving. ADAS is a key stepping stone in the evolution of autonomous cars.

The ADAS and AD (Autonomous Driving) market is experiencing rapid growth due to increasing demand for vehicle safety, convenience, and the push toward autonomous driving. The automotive software market is projected to more than double in size from $31 billion in 2019 to roughly $80 billion in 2030, a CAGR of more than 9%, McKinsey reports. ADAS typically refers to partial automation and assists the driver with specific tasks, enhancing safety and reducing workload, while AD aims for full vehicle automation, potentially eliminating the need for human drivers in the future.

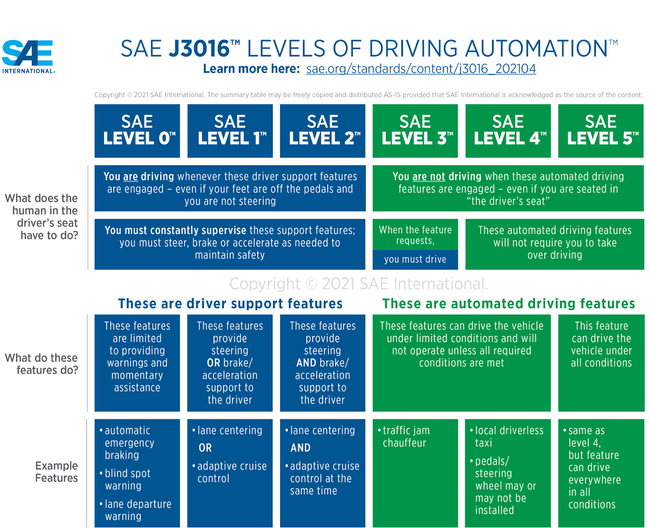

Levels of driving automation

Before we explore the sensors used in ADAS, it’s useful to understand the six levels of driving automation, as defined by the Society of Automotive Engineers (SAE). They categorise vehicles based on the degree of automation, ranging from Level 0 (no automation) to Level 5 (full automation).

SAE Levels of Driving Automation

As automakers move towards developing autonomous vehicles, the ADAS market is growing rapidly. Some OEMs like Mercedes-Benz have reached Level 3 “eyes-off” autonomy and several countries have legalised Level 3 autonomous driving on highways. At Level 3, the car can assume control in some limited scenarios (known as the Operational Design Domain or ODD), such as on highways. While the driver does not need to hold the steering wheel or pay attention, they must be ready to resume control if the car requests it. Waymo is an example of a Level 4 autonomous vehicle implementation, which means driving is fully autonomous in specific or limited conditions, such as a defined route or area. In Level 4 autonomy, the driver need not be ready to intervene. Level 5 would mean fully autonomous driving under all conditions, with no steering wheel or pedals required.

Types of ADAS sensors

Automated driving depends on a suite of sensors, typically including GNSS receivers for absolute positioning, as well as cameras, radars and LiDARs that allow the vehicle to perceive its surroundings and make smart decisions regarding braking and lane positioning. Let’s examine each of these in detail.

Cameras

ADAS cameras are sensors that provide visual data about the environment and help drivers and vehicles detect, identify and recognise objects around them, such as pedestrians, other vehicles and traffic signs. Cameras are strategically placed in the vehicle to cover key areas: the front camera helps detect lanes and pedestrians; the rear camera aids with parking; side cameras help with blind spot monitoring; and 360-degree cameras provide a bird’s-eye view. Front camera systems are currently the most widely used.

The main functions of ADAS cameras are:

Object and lane detection

Cameras can detect traffic signs, pedestrians, and other vehicles that can help with avoiding collisions, speed adaptations and improved safety. They can detect road markings, which helps with lane-keeping assist (LKA) and lane-departure warning (LDW).

Trigger responses and safety alerts

Cameras capture images that are processed by software to trigger responses to improve safety, such as automatically initiating emergency braking, alerting the driver of a blind spot, or helping with parking.

Colour and texture detection

Unlike radar and LiDAR, cameras can identify colour (e.g., traffic lights) and textures (e.g., road surface conditions), enhancing scene understanding for more complex environments.
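As a rough illustration of how a lane-departure warning might use the camera’s lane detection, the sketch below checks how much room is left between the vehicle’s edge and the nearest lane marking. It assumes the vision software already reports the vehicle’s lateral offset from the lane centre. The function names, default lane and vehicle widths, and the 0.2 m warning margin are all hypothetical values chosen for illustration, not figures from any production system.

```python
def lane_margin_m(lateral_offset_m: float,
                  lane_width_m: float,
                  vehicle_width_m: float) -> float:
    """Gap between the vehicle's nearest edge and the nearest lane marking.

    lateral_offset_m is the distance of the vehicle centre from the lane
    centre (sign indicates side; only the magnitude matters here).
    A negative result means the vehicle edge has crossed the marking.
    """
    return lane_width_m / 2.0 - abs(lateral_offset_m) - vehicle_width_m / 2.0


def should_warn(lateral_offset_m: float,
                lane_width_m: float = 3.5,      # hypothetical lane width
                vehicle_width_m: float = 1.8,   # hypothetical vehicle width
                min_margin_m: float = 0.2) -> bool:  # hypothetical threshold
    """Return True when the remaining margin drops below the threshold."""
    margin = lane_margin_m(lateral_offset_m, lane_width_m, vehicle_width_m)
    return margin < min_margin_m


if __name__ == "__main__":
    for offset in (0.0, 0.5, 0.8):
        print(offset, should_warn(offset))
```

A real system would also consider the rate at which the vehicle is drifting and whether the indicator is on; this sketch covers only the static geometry.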

Radar

Radars have an important role to play in the ADAS system, working to enhance perception of the surrounding environment. Radar is a sensing technology that works by sending out radio waves and measuring how long it takes for them to bounce back after hitting an object. Radar can help with a variety of safety features, including:

Object detection: Radar is used to detect objects in the vehicle’s surroundings. It works even in poor visibility, darkness or adverse weather conditions like rain, fog, or snow. This is a major advantage of radar over cameras.

Speed measurement: Radar can calculate the relative speed of objects (e.g., other cars or obstacles) by measuring Doppler shifts in the reflected signal. This is crucial for adaptive cruise control and collision avoidance systems.

Range: Radar can detect objects at longer ranges (250 metres or more), making it ideal for high-speed scenarios like highway driving.
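The two measurements described above reduce to short formulas: range follows from the round-trip time of the echo, and relative speed follows from the Doppler shift. The sketch below shows both. The function names are illustrative, and the 77 GHz carrier used in the example is an assumption (a commonly used automotive radar band), not a value stated in this article.

```python
SPEED_OF_LIGHT_MPS = 299_792_458.0  # radio waves travel at the speed of light


def radar_range_m(round_trip_s: float) -> float:
    """Distance to the target from the echo's round-trip time.

    The signal travels out and back, so the one-way distance is half
    of the total path.
    """
    return SPEED_OF_LIGHT_MPS * round_trip_s / 2.0


def radial_speed_mps(doppler_shift_hz: float, carrier_hz: float) -> float:
    """Relative radial speed from the Doppler shift of the reflection.

    A positive shift (echo frequency above the carrier) means the
    target is closing; a negative shift means it is moving away.
    """
    return doppler_shift_hz * SPEED_OF_LIGHT_MPS / (2.0 * carrier_hz)


if __name__ == "__main__":
    # An echo arriving 1 microsecond after transmission is roughly 150 m away.
    print(radar_range_m(1.0e-6))
    # Assumed 77 GHz carrier with a 10 kHz Doppler shift.
    print(radial_speed_mps(10_000.0, 77e9))
```

Note that the Doppler shift yields only the component of velocity along the line of sight, which is why it is described as relative (radial) speed.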

Radars are mounted on the front and rear to monitor and detect objects in the surroundings, whereas radars inside the car monitor passenger behaviours. Radar mounted at an angle enables a wider view of the surroundings, improving coverage for blind spots, or vehicles in adjacent lanes.

LiDAR

LiDAR (Light Detection and Ranging) is a remote sensing technology that uses laser light to measure distance and make 3D maps and models of the real world.

Vehicles can use both electromechanical LiDAR and solid-state LiDAR. Electromechanical LiDAR features moving parts for high-resolution scanning and is often used in advanced autonomous systems. However, it can be quite bulky and expensive. In contrast, solid-state LiDAR has no moving components, making it more robust, compact, and cost-effective, leading to its increasing adoption in consumer vehicles. An example of this is the BMW 7 Series, which uses Innoviz’s solid-state LiDAR to enable some of its L3 automated driving features.

Applications of LiDAR in ADAS include:

Precise mapping: LiDAR uses laser pulses to create a highly accurate 3D map of the vehicle’s surroundings.

Obstacle detection: LiDAR can detect smaller objects and differentiate between them (like pedestrians, vehicles, cyclists) due to its high spatial resolution.
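To make the idea of a 3D map concrete: each laser return gives a range along a known beam direction, and converting that to Cartesian coordinates yields one point of the point cloud. The sketch below shows that conversion. The axis convention (x forward, y left, z up) and the function name are assumptions for illustration; real sensors document their own frames.

```python
import math
from typing import Tuple


def lidar_return_to_point(range_m: float,
                          azimuth_deg: float,
                          elevation_deg: float) -> Tuple[float, float, float]:
    """Convert one LiDAR return to an (x, y, z) point in the sensor frame.

    Assumed convention: x forward, y left, z up; azimuth is measured
    counter-clockwise from the x axis, elevation upward from the
    horizontal plane.
    """
    azimuth = math.radians(azimuth_deg)
    elevation = math.radians(elevation_deg)
    horizontal = range_m * math.cos(elevation)  # projection onto the ground plane
    x = horizontal * math.cos(azimuth)
    y = horizontal * math.sin(azimuth)
    z = range_m * math.sin(elevation)
    return (x, y, z)


if __name__ == "__main__":
    # A small sweep of returns becomes a small point cloud.
    returns = [(20.0, -10.0, 0.0), (20.0, 0.0, 0.0), (20.0, 10.0, 2.0)]
    cloud = [lidar_return_to_point(r, az, el) for r, az, el in returns]
    for point in cloud:
        print(point)
```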

LiDAR also offers long range: it can detect objects at distances of 200 metres or more, allowing it to monitor a broad area ahead of the vehicle.

MEMS and IMUs

MEMS (Micro-Electro-Mechanical Systems) technology includes small, low-power sensors such as accelerometers, gyroscopes, pressure sensors, and more. They can measure various physical phenomena like acceleration, rotation, and pressure.

In the automotive context, an Inertial Measurement Unit (IMU) is a specialised sensor system that integrates multiple MEMS sensors, primarily accelerometers and gyroscopes, to measure a vehicle’s motion and orientation. IMUs provide comprehensive motion data, allowing for precise tracking of a vehicle’s position, speed, and direction, which is essential for advanced safety features and navigation systems. Their applications in ADAS include:

Motion tracking: IMUs measure acceleration and angular velocity, allowing ADAS to track the vehicle’s movement accurately, including speed, direction, and tilt.

Enhanced localisation: IMUs complement GNSS data by providing high-frequency motion updates, improving the vehicle’s localisation accuracy, especially in challenging environments like tunnels or urban canyons where GNSS signals may be weak.

Stability control: IMUs contribute to systems like Electronic Stability Control (ESC) by monitoring the vehicle’s orientation and detecting skids or loss of traction, enabling timely driver warnings. They also help autonomous systems maintain vehicle orientation and stability during dynamic manoeuvres.
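The motion tracking described above is often called dead reckoning: acceleration and angular velocity are integrated over time to update speed, heading and position. The sketch below is a minimal planar version under simplifying assumptions (flat road, forward acceleration and yaw rate only, simple Euler integration). The `State` class and `dead_reckon` function are hypothetical names, not part of any particular IMU library.

```python
import math
from dataclasses import dataclass
from typing import Iterable, Tuple


@dataclass
class State:
    x: float = 0.0        # metres, local frame
    y: float = 0.0        # metres, local frame
    heading: float = 0.0  # radians, 0 = along the x axis
    speed: float = 0.0    # metres per second


def dead_reckon(state: State,
                samples: Iterable[Tuple[float, float]],
                dt: float) -> State:
    """Propagate the state through IMU samples taken every dt seconds.

    Each sample is (forward_acceleration_mps2, yaw_rate_radps).
    """
    x, y, heading, speed = state.x, state.y, state.heading, state.speed
    for accel, yaw_rate in samples:
        speed += accel * dt          # integrate acceleration into speed
        heading += yaw_rate * dt     # integrate angular velocity into heading
        x += speed * math.cos(heading) * dt  # integrate velocity into position
        y += speed * math.sin(heading) * dt
    return State(x, y, heading, speed)


if __name__ == "__main__":
    # One second at a constant 10 m/s while turning gently left.
    start = State(speed=10.0)
    imu_samples = [(0.0, 0.1)] * 100
    print(dead_reckon(start, imu_samples, dt=0.01))
```

Because every step adds to the previous one, any small sensor error is also integrated, which is why IMU-only positions drift and need an external reference.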

Read more about the applications of IMUs in positioning for AVs.

GPS/GNSS

GNSS (Global Navigation Satellite System) is a satellite-based technology that provides accurate positioning, navigation, and timing services worldwide. In the context of ADAS, GNSS provides vehicle positioning by using satellite data, enabling accurate navigation and real-time location tracking. Although GNSS is commonly referred to as “GPS”, GPS is strictly the US constellation; other major constellations include Galileo (EU), BeiDou (China) and GLONASS (Russia).

GNSS is the only PNT (positioning, navigation and timing) sensor that can provide an absolute rather than relative position.

Read also: GNSS challenges for ADAS engineers: Enabling lane-level accuracy in difficult environments

PNT in ADAS and AD systems is a safety-critical function, so it must work as expected at all times. For that reason, ADAS and AD systems can’t rely on GNSS alone, and instead use GNSS as one of an array of sensors that contribute to accurate, reliable and continuous PNT. GNSS plays a unique role within such multi-sensor positioning systems, contributing in multiple ways, including:

Absolute positioning: GNSS measurements form a key input for real-time localisation, helping the ADAS determine the vehicle’s exact position and trajectory. Once an absolute position is established via GNSS, the other PNT sensors can then position the vehicle within the lane to the required level of accuracy.

System initialisation and calibration: The global position provided by GNSS can be used to accelerate the vehicle control system’s initialisation process. While HD maps can provide a high-accuracy position, they can only do so efficiently if they know the vehicle’s rough starting location. Without that information, they would need to search the whole global database to find a match with what the camera and LiDAR are seeing: a time, data, and compute-intensive process.

An accurate GNSS position can also be used to calibrate other sensors to maintain their own accuracy. In particular, IMUs and MEMS sensors tend to accumulate biases over time that cause their accuracy to drift. These sensors can be regularly re-calibrated by using the GNSS position to correct any biases that have crept in.
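A heavily simplified sketch of this re-calibration idea follows. It assumes a constant accelerometer bias over a short window and uses GNSS-derived speed at the start and end of the window as the reference (a simplification of using the GNSS position directly). Production systems typically use a Kalman filter or similar estimator; the function names here are hypothetical.

```python
from typing import List, Sequence


def estimate_accel_bias(imu_accels: Sequence[float],
                        dt: float,
                        gnss_speed_start: float,
                        gnss_speed_end: float) -> float:
    """Estimate a constant forward-accelerometer bias over one window.

    Integrating the IMU acceleration gives the speed change the IMU
    believes happened. The difference from the GNSS-observed speed
    change, spread over the window duration, is attributed to bias.
    """
    if not imu_accels:
        raise ValueError("need at least one IMU sample")
    window_s = len(imu_accels) * dt
    delta_v_imu = sum(a * dt for a in imu_accels)
    delta_v_gnss = gnss_speed_end - gnss_speed_start
    return (delta_v_imu - delta_v_gnss) / window_s


def remove_bias(imu_accels: Sequence[float], bias: float) -> List[float]:
    """Apply the estimated bias correction to raw samples."""
    return [a - bias for a in imu_accels]


if __name__ == "__main__":
    # True acceleration 0.5 m/s^2 for 2 s, but the sensor reads 0.05 too high.
    raw = [0.55] * 200
    bias = estimate_accel_bias(raw, dt=0.01,
                               gnss_speed_start=10.0, gnss_speed_end=11.0)
    print(bias)
    print(remove_bias(raw, bias)[:3])
```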

Geofencing: ADAS and AD features typically have geographical limits, so the system must be able to tell when the vehicle is entering or leaving an area where the feature is not authorised to operate. This is typically achieved using geofencing, which uses GNSS positions to define authorised zones in the system’s operating software. When the GNSS receiver determines that the vehicle is leaving an authorised zone, the system can hand over operation to a human driver. Accurate GNSS data is therefore crucial for geofences to work.
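A geofence check can be sketched in a few lines. The example below assumes the simplest possible zone, a circle defined by a centre and radius, and uses the haversine formula for the great-circle distance on a spherical Earth. Real authorised zones are more likely to be polygons or road segments, and the function names and coordinates are purely illustrative.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius, spherical approximation


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in metres between two lat/lon points (degrees)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = phi2 - phi1
    d_lambda = math.radians(lon2 - lon1)
    a = (math.sin(d_phi / 2.0) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2.0) ** 2)
    return 2.0 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def inside_geofence(lat: float, lon: float,
                    centre_lat: float, centre_lon: float,
                    radius_m: float) -> bool:
    """True when the GNSS position lies within the circular authorised zone."""
    return haversine_m(lat, lon, centre_lat, centre_lon) <= radius_m


if __name__ == "__main__":
    # Hypothetical zone: 5 km around an arbitrary centre point.
    centre = (51.5, -0.12)
    print(inside_geofence(51.51, -0.12, *centre, radius_m=5_000.0))
    print(inside_geofence(51.60, -0.12, *centre, radius_m=5_000.0))
```

In practice the check would also account for GNSS position uncertainty, for example by requesting a handover some distance before the boundary is reached.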

V2X communication: GNSS provides a common reference frame for vehicle-to-vehicle and vehicle-to-infrastructure communication (collectively known as vehicle-to-everything or ‘V2X’). GNSS allows vehicles to share their exact position, speed, and direction with other nearby vehicles. This can enable systems like collision avoidance, platooning, and cooperative lane changes, as all vehicles can interpret one another’s position relative to the same global reference.
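To illustrate why a shared global reference is useful, the sketch below takes two GNSS positions, the receiving vehicle’s own and one reported by a nearby vehicle, and converts them to a local east/north offset in metres. It uses a flat-Earth (equirectangular) approximation, which is reasonable only over the short distances typical between neighbouring vehicles. The function name and message content are assumptions, not part of any V2X standard.

```python
import math
from typing import Tuple

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius, spherical approximation


def relative_offset_m(own_lat: float, own_lon: float,
                      other_lat: float, other_lon: float) -> Tuple[float, float]:
    """East and north offset (metres) of another vehicle relative to our own.

    Inputs are latitude and longitude in degrees. Uses a local flat-Earth
    approximation around our own position, so it is only suitable for
    short ranges.
    """
    d_lat = math.radians(other_lat - own_lat)
    d_lon = math.radians(other_lon - own_lon)
    north = EARTH_RADIUS_M * d_lat
    # Lines of longitude converge towards the poles, hence the cosine term.
    east = EARTH_RADIUS_M * d_lon * math.cos(math.radians(own_lat))
    return (east, north)


if __name__ == "__main__":
    east, north = relative_offset_m(48.0, 11.0, 48.0005, 11.0002)
    print(east, north, math.hypot(east, north))
```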

For more information on the role of GNSS in ADAS, download our comprehensive white paper.

HD Maps

While technically not a sensor, HD maps are worth mentioning as a technology that’s becoming increasingly important in the ADAS ecosystem. HD maps (High-Definition maps) in ADAS provide highly detailed and accurate information about road environments, including physical data like lane markings, traffic signs, and road geometry, but also metadata like speed limits and driving rules. These maps are essential for enabling advanced features like lane-keeping, adaptive cruise control, and autonomous driving by giving the vehicle precise, real-time context beyond what onboard sensors can detect. HD maps work in conjunction with LiDAR, radar, cameras, and GNSS to provide precise localisation, helping the ADAS make informed driving decisions. Some of the companies operating in this space include TomTom and Dynamic Map Platform (DMP).

While HD maps can be highly accurate, there are also several challenges including high cost and complexity. Creating and maintaining HD maps is resource-intensive, requiring specialised mapping vehicles. Keeping HD maps updated in real time, especially in dynamic environments with road changes, construction, or traffic, is difficult and requires constant monitoring and revision. The risk of these maps being out of date also means that autonomy systems must have mitigation strategies in place to enable them to fail safely.

Future trends

As individual ADAS features evolve toward products with higher levels of autonomy, there is increasing pressure to develop efficient and cost-effective systems. To achieve this, future trends focus on maximising the utility of each sensor and optimising overall system performance. This requires tough choices on the selection of sensors, evaluating their relative benefits and flaws, while also providing redundancy for safe and continuous operation. With the right hardware in place, software is critical to compare inputs and decide where to place trust. With an overwhelming wealth of data incoming, Machine Learning is fast becoming the tool of choice to determine the right actions.
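As a simple, non-ML illustration of “deciding where to place trust”, the sketch below fuses several estimates of the same quantity (for example, distance to the vehicle ahead from radar and from a camera) by weighting each one by the inverse of its variance. This is a classical statistical technique rather than the machine learning approach mentioned above, and the sensor values and variances in the example are invented for illustration.

```python
from typing import Sequence, Tuple


def fuse_estimates(estimates: Sequence[Tuple[float, float]]) -> Tuple[float, float]:
    """Inverse-variance weighted fusion of independent estimates.

    Each entry is (value, variance). Estimates with lower variance
    (more trusted sensors) receive proportionally more weight.
    Returns (fused_value, fused_variance).
    """
    if not estimates:
        raise ValueError("need at least one estimate")
    if any(variance <= 0.0 for _, variance in estimates):
        raise ValueError("variances must be positive")
    weights = [1.0 / variance for _, variance in estimates]
    total_weight = sum(weights)
    fused_value = sum(w * value
                      for w, (value, _) in zip(weights, estimates)) / total_weight
    fused_variance = 1.0 / total_weight
    return (fused_value, fused_variance)


if __name__ == "__main__":
    radar = (50.0, 0.25)   # hypothetical: 50.0 m, low variance
    camera = (52.0, 1.0)   # hypothetical: 52.0 m, higher variance
    print(fuse_estimates([radar, camera]))
```

The fused variance is always smaller than that of the best single input, which is one way to quantify the benefit of redundancy between sensors.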

An alternative to traditional (and costly) hardware upgrades is to improve software algorithms. Better data processing extracts more precise information from existing hardware, which allows the sensor suite to be rationalised and costs to be minimised.

Additionally, reducing the reliance on cloud computing for real-time decisions will be essential to cutting down on latency and cloud infrastructure expenses. Localised, edge-computing solutions and smarter, more efficient HD map use can further reduce cloud-based data processing, making ADAS systems not only more scalable but also more profitable. These advancements are critical to ensuring that future ADAS solutions are both technologically advanced and economically viable for mass-market adoption.